The AI Security Platform That Builds Trust

Apply security across the entire AI lifecycle, from development to real-time use.

Trusted By

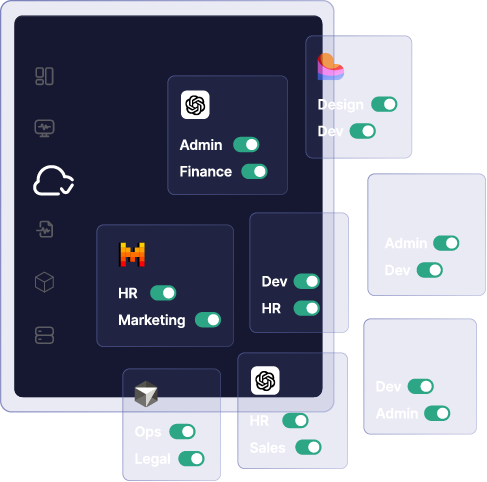

AI security suite for custom apps, AI agents, and users, built to work across any model

Protecting the infinitely expanding AI surface area, beyond the model’s learned space and even beyond the AI application’s own comprehension

AI generates unpredictable risk

Only AI-native security can comprehend and protect the boundless connections and intricate logic of AI/LLM.

See actual and validated threats

Protect multimodal models, including LLM, vision, and tabular

See exposures within and across models throughout the AI pipeline

Holistic security & trust protection

Our unique GenAI-built solution for AI security and trust

DeepKeep's AI security includes risk assessment and confidence evaluation, protection, monitoring, and mitigation, from the R&D phase of machine learning models through the entire application lifecycle.

Seen, unseen and unpredictable validated vulnerabilities

Real-time detection, protection and inference

Security and trustworthiness for holistic protection

Exposure within and across models throughout AI pipelines

Protecting multimodal models, including LLM, image, and tabular data

Physical sources beyond the digital surface area

Why DeepKeep?

Only AI-native security can comprehend and protect the boundless connections and intricate logic of AI/LLM

Only a tightly coupled security & trust solution can identify causes and targeted remedies for security, compliance or operational risk

DeepKeep delivers AI ecosystem security that builds trust.

Get in Touch

How secure is your AI?

Reach out to find out.